Reasoning

I love being a dad.

I remember the very first moment I realized that this creature that I had helped create was a different kind of animal than I was familiar with.

Growing up on the ranch, I was blessed to have a wonderful family dog.

Mooney was a purebred Chesapeake Bay retriever, given to me as a pup by an owner after I had trained the pup's father in basic obedience for a dog show.

Mooney was an amazing dog. He is, to this day, the best fetching dog I've ever worked with.

I could hit golf balls out into the field behind our ranch house and Mooney would fetch them all. I could hit three in a row and he would fetch them in order.

Once I threw a rock into the middle of a pond and he swam diligently out to the middle, dove down, and returned with a rock.

To this day, I'm not sure it was the same rock, but it didn't matter. He loved to fetch.

And more importantly, he loved to please me.

But when kids come into your life, there is just something different.

These little animals have an innate curiosity and decision-making that is perfectly human.

It is the ability to understand consequences and to argue things out internally that defines us as a species.

So now we have AI systems that are being referred to as "human-like" and are said to be capable of reasoning.

What exactly does this mean? Let's break it down.

What is all the fuss about?

In the past several weeks, there has been increased attention on AI reasoning models.

Although OpenAI's o1 model has been out for some time, it was the release of DeepSeek's R1 model that prompted all the international headlines and the temporary collapse of tech stocks.

The R1 model is a reasoning model, meaning that it can analyze a problem, develop an approach to solving it, internally debate the answers until it is satisfied, and return a well-researched, well-thought-out answer.

The financial issues all stemmed from widespread reports that DeepSeek accomplished this programming and training feat for a fraction of what other tech companies, like OpenAI, have spent.

This sent shares of chip makers (which had dreams of huge data centers filled with expensive components) into decline and raised questions about how much AI companies were spending on training.

There is a lot to unpack here, but things are not always what they seem.

It is likely that the low development cost DeepSeek quoted leaves out many other requirements for building a large language model, including basic technology infrastructure.

This is not even accounting for distillation. More about that in the callout.

Why is reasoning important?

Put very simply, reasoning allows AI models to think through problems in a logical, stepwise manner.

Reasoning models employ a "chain-of-thought" approach, which involves breaking down complex problems into smaller, manageable steps. This process allows the AI to "think" through a problem systematically, much like a human would.

For example, when solving a math problem, the model might outline each step of the calculation, explaining its reasoning along the way.

Many reasoning models, such as DeepSeek-R1, combine chain-of-thought reasoning with reinforcement learning. This approach allows the model to learn from trial and error, improving its problem-solving abilities over time without explicit human guidance.

These models also exhibit a form of "meta-cognition" or "thinking about thinking." This capability allows them to verify and check their own reasoning processes, leading to more reliable and explainable outputs.

The best way to learn is to just use it

I think the best way to experience the difference in LLMs is by actually testing the model with a real-world problem.

One thing that I have learned is that prompting these models is a little different from what you might use for traditional ChatGPT-style models.

Here are a few rules:

1. Use minimal prompting for complex tasks. Rely on zero-shot or single-instruction prompts, allowing the model's internal reasoning capabilities to work independently.

2. Encourage more reasoning for complex tasks by explicitly instructing the model to spend more time thinking. This approach has been shown to improve performance on challenging problems.

3. Avoid few-shot prompting, as it consistently degrades performance in reasoning models. Limit examples to one or two at most.

4. For multi-step challenges, leverage the model's built-in reasoning capabilities rather than providing step-by-step instructions.

5. Keep prompts clear and concise, especially for tasks requiring structured output like code generation.

6. Use the "Let's think step by step" prompt to generate reasoning chains for complex problems.
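If you ever send prompts from a script instead of typing them into a chat window, the rules above can be sketched as a tiny prompt builder. This is only an illustration under my own assumptions: the function name `build_reasoning_prompt`, its parameters, and the bakery scenario are all made up for this example and are not part of any model's API. It just assembles text that you would then paste or send to whichever reasoning model you use.

```python
def build_reasoning_prompt(task, context="", output_format="", think_longer=False):
    """Assemble a short zero-shot prompt for a reasoning model.

    Hypothetical helper for illustration only. It states the task once,
    includes no worked examples (rule 3) and no step-by-step
    instructions (rule 4), and keeps everything brief (rule 5).
    """
    parts = [task.strip()]
    if context.strip():
        # Optional background facts the model may not already know.
        parts.append("Background:\n" + context.strip())
    if output_format.strip():
        # Say what the answer should look like, not how to get there.
        parts.append("Format: " + output_format.strip())
    if think_longer:
        # Rule 2: explicitly invite more thinking on hard problems.
        parts.append("Take your time and think this through carefully before answering.")
    return "\n\n".join(parts)


# Made-up business scenario, purely for demonstration.
prompt = build_reasoning_prompt(
    task="Our bakery's weekday sales fell after a price increase. Should we roll the increase back?",
    output_format="A recommendation followed by the three strongest reasons.",
    think_longer=True,
)
print(prompt)
```

The optional `context` argument is where pasted-in background research would go when the model's own training data is not recent enough.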

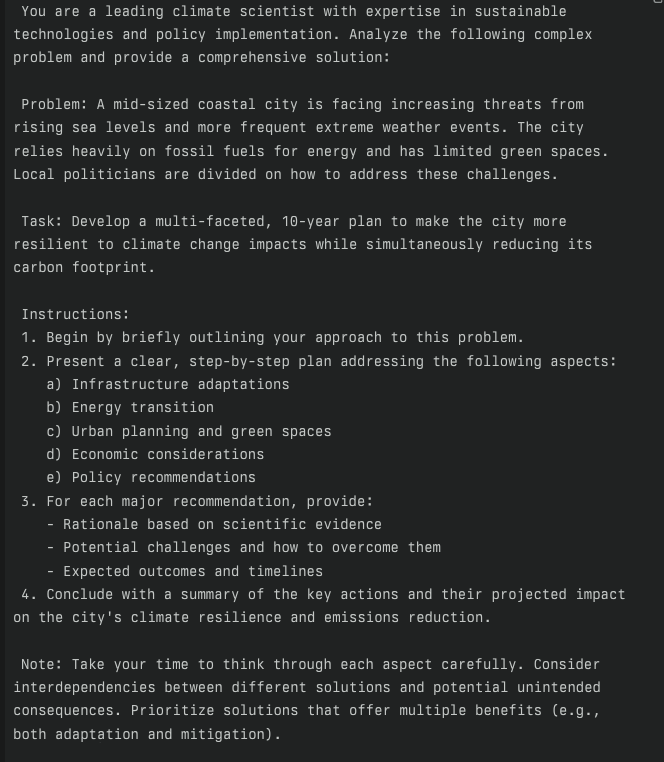

Let me show you how this would look in practice. Here is an optimized prompt for a reasoning model:

Just take this prompt framework, substitute a problem you might face in your business, and see what kind of output you can create!

It is incredibly easy to access these more complex models today.

DeepSeek R1 is currently available for free just by signing up, although this will probably change at some point.

The o1 model from OpenAI has been one of their most expensive models, but just in the past few days they have released o3-mini.

The o3-mini model is OpenAI's latest reasoning model, released on January 31st. It is a scaled-down version of the full o3 model, optimized for performance and cost efficiency while maintaining core reasoning capabilities. It is being rolled out to all users, so look for this in your ChatGPT account soon.

My preferred way to access these reasoning models is through Perplexity. Although these models are new, there is still a limit on the recency of their training data.

By combining the model with Perplexity, you can do background research, use it to load up the model with very current information, and then perform complex reasoning tasks.

I'll show you how in this video.

I hope I've given you a reason to give these models a try.
